Disclaimer: I’m not an ML expert and not even a serious ML specialist (yet?), so feel free to let me know if I’m wrong!

It seems to me that we have hit a bit of an “on-premises” vs. “on-premise” situation in the ML/AI and vector search terminology space. The majority of product announcements, blog articles and even some papers I’ve read use the term vector embeddings to describe embeddings, but embeddings already are vectors themselves!

An embedding is a vector of scalar values (floats). So it’s correct to say embedding vector as it is a vector (1-dimensional array) of scalar values. These scalar values define where your item of interest “falls” during inference in the N-dimensional space that was constructed when your ML model was trained. But when one says vector embedding, this technically implies a vector of embedding vectors! A vector of vectors would be a 2-dimensional matrix then.
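To make the distinction concrete, here is a minimal sketch in plain Python. The numbers, the 4-dimensional size and the `shape` helper are all made up for illustration (real models typically produce hundreds or thousands of dimensions). It only shows that a single embedding is a 1-dimensional structure, while a collection of them is 2-dimensional:

```python
# Hypothetical 4-dimensional embedding of one item: a 1-D list of floats.
# The values are invented for illustration, not produced by a real model.
embedding_vector = [0.12, -0.48, 0.91, 0.05]

# Several embedding vectors stacked together: a list of lists (2-D).
embedding_matrix = [
    [0.12, -0.48, 0.91, 0.05],   # e.g. one image
    [0.10, -0.51, 0.88, 0.07],   # e.g. a similar image
    [-0.73, 0.22, 0.04, 0.64],   # e.g. a very different image
]


def shape(values):
    """Return the nested-list dimensions as a tuple (illustrative helper)."""
    dims = []
    while isinstance(values, list):
        dims.append(len(values))
        if not values:           # stop on an empty list
            break
        values = values[0]
    return tuple(dims)


print(shape(embedding_vector))   # (4,)   -> one embedding vector
print(shape(embedding_matrix))   # (3, 4) -> a vector of embedding vectors
```

In this sketch, the thing with shape `(4,)` is what an embedding model returns for one item, and the `(3, 4)` structure is what you get only once you collect several of them.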

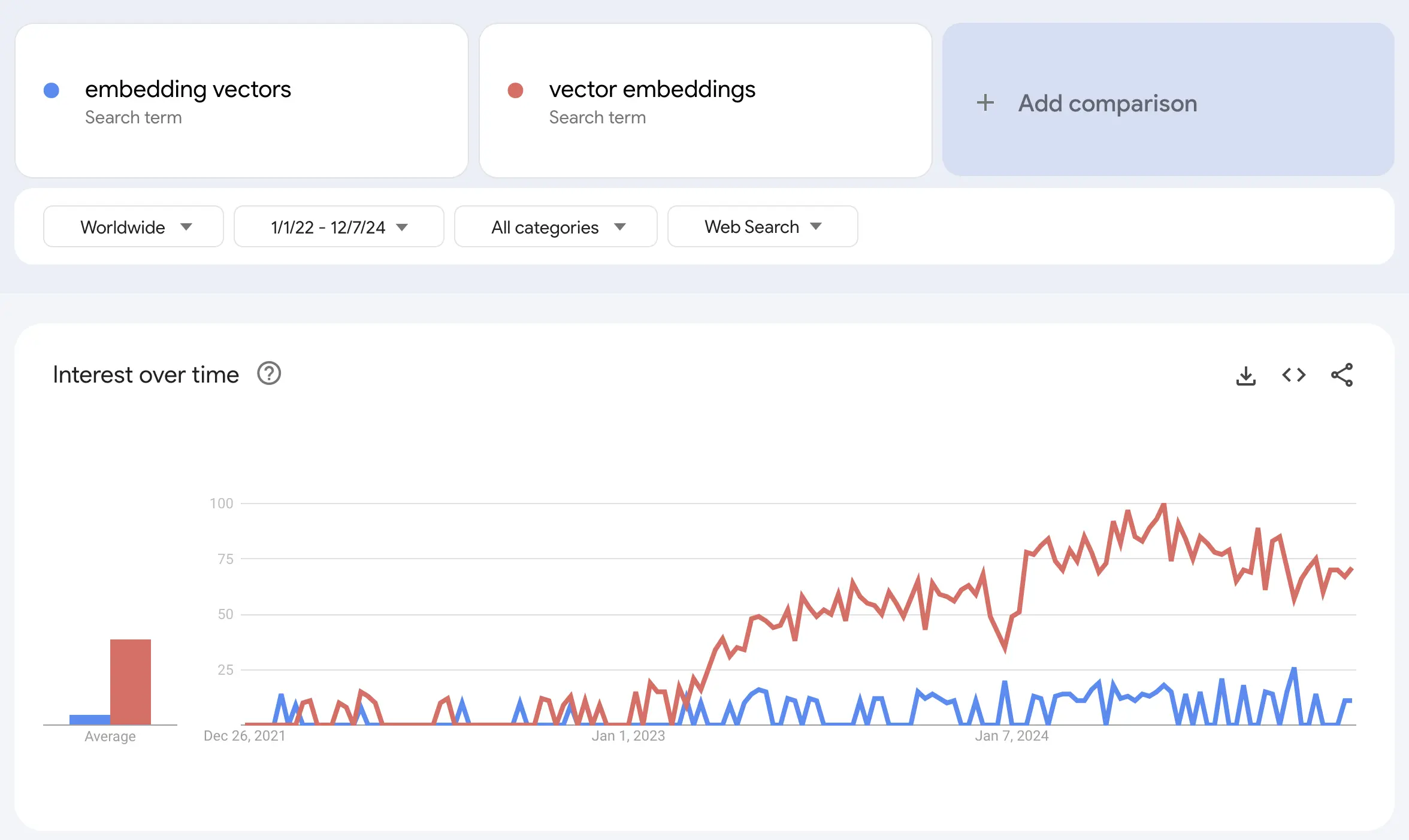

No doubt everyone understands what is being referred to either way, but it slightly bothers me. It’s just like saying “on premise”, which is not at all the same thing as “on premises”, even if it sounds the same. Here’s a Google Trends screenshot, so I think the cat is well out of the bag by now:

I think I understand why the “vector embeddings” term has ended up going mainstream: it is somehow just smoother to say, write and read. But it still mildly bothers me whenever I see it written that way somewhere, just like the “on-premise” thing. At some point I wasn’t even sure myself (as I said, not an expert), because plenty of published research papers use the “wrong” terminology too.

Or, maybe I’m just looking at this from the wrong angle. Perhaps there’s a theoretical possibility of using scalar embeddings somewhere (dimensions = 1, vector length = 1), but anything multidimensional could rightfully be called a vector embedding then?

Book: Build a Large Language Model from Scratch

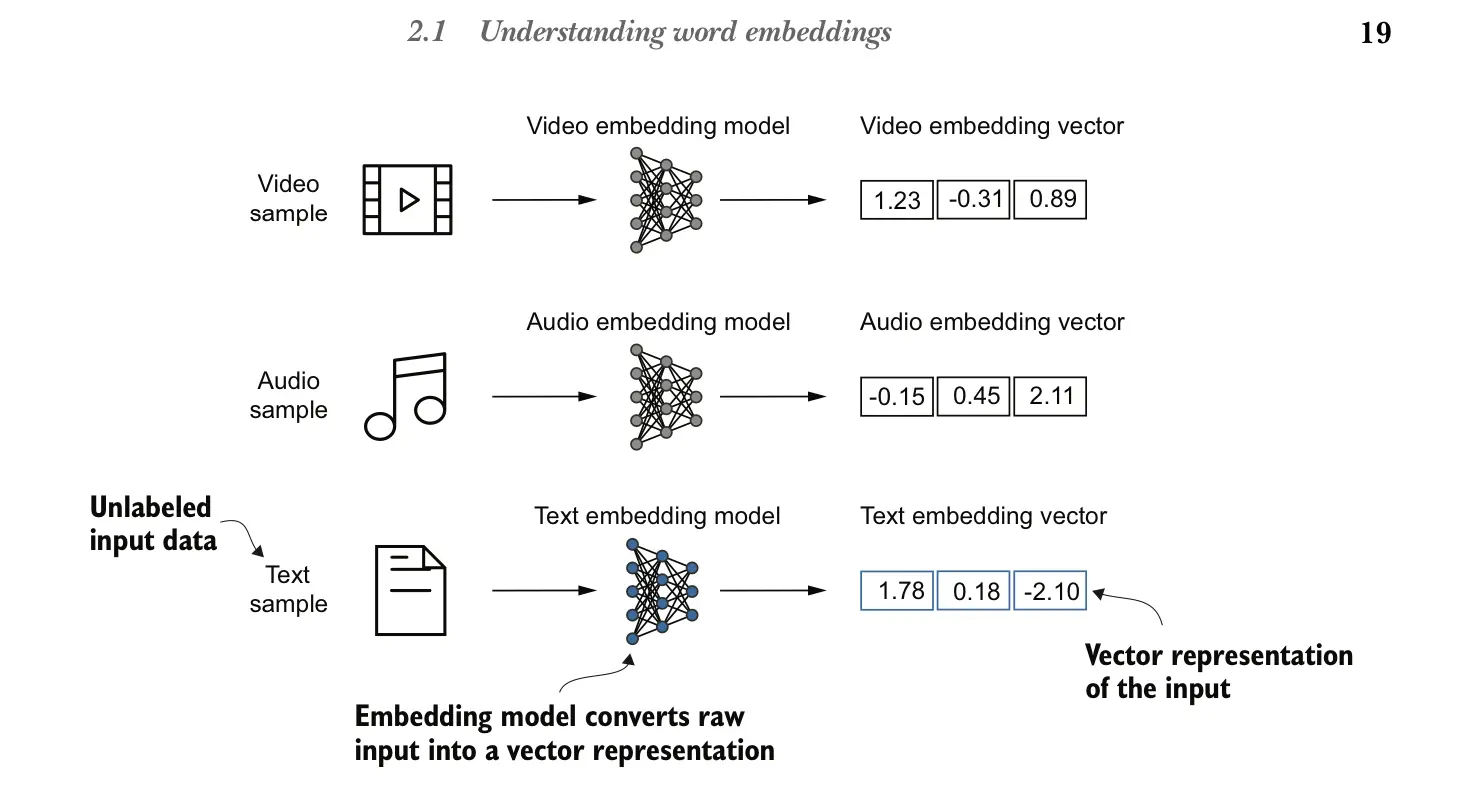

However, what gave me the confidence that I might be right after all (yay!), and prompted me to write this blog post, is Sebastian Raschka’s book Build a Large Language Model (from Scratch), which I just started reading and am enjoying.

I was immediately relieved to see that Sebastian has used the proper term embedding vector in the explanations and diagrams too! I’m pasting one screenshot from the book (hopefully it’s fair use):

So, I’m happy to conclude that embedding models produce embedding vectors, not vector embeddings! :-)

More Vector Stuff

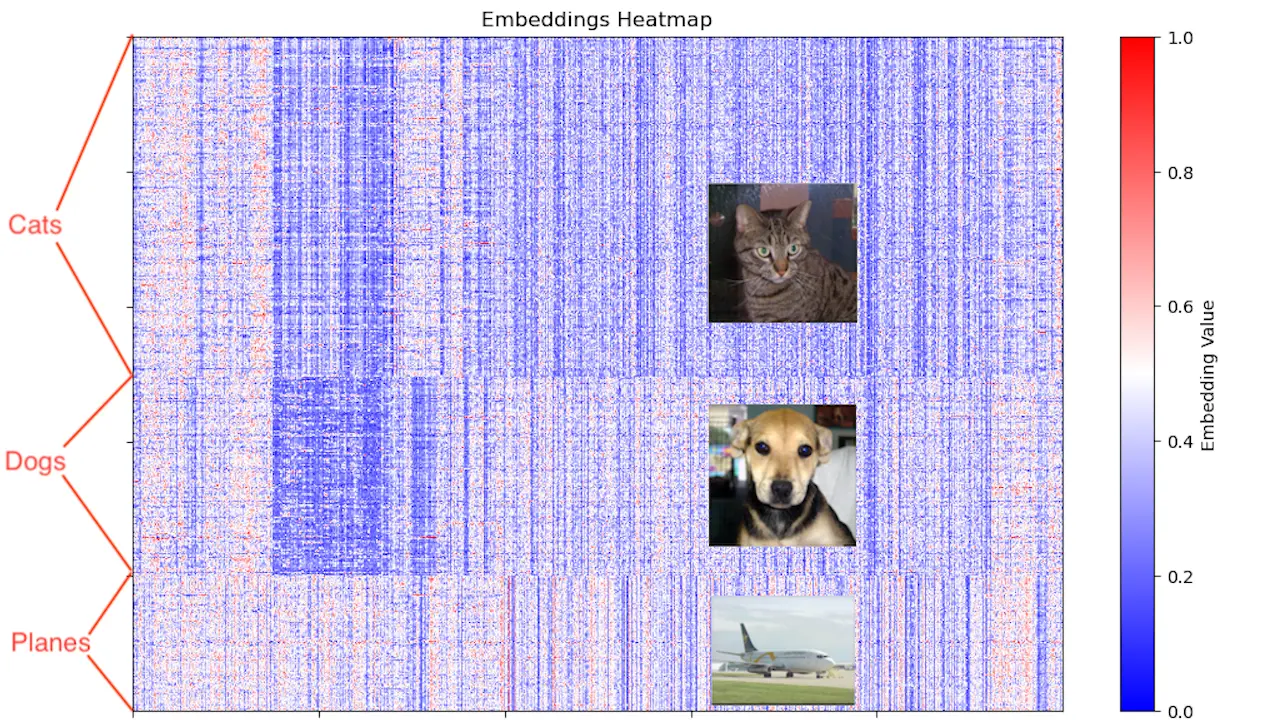

When I want to learn something new, I can’t help but start from the lowest layers (say, hardware, CUDA and matrix multiplication in this case) and work my way up into the upper layers from there. So I had some fun visualizing some of the embedding vectors from the Kaggle Cats & Dogs dataset and added some planes in too.

These exploratory blog posts will get nowhere near explaining how ML inference or vector search works, but it was still a fun exercise, and the source code is available on GitHub. I’ll add more posts as I learn things myself: