Did you know that there’s something like Active Session History also in the Exadata storage cells? ;-)

The V$CELL_THREAD_HISTORY view is somewhat like V$ACTIVE_SESSION_HISTORY, but it’s measuring thread activity in the Exadata Storage Cells:

SQL> @desc v$cell_thread_history

Name Null? Type

------------------------------- -------- ----------------------------

1 CELL_NAME VARCHAR2(1024)

2 SNAPSHOT_ID NUMBER

3 SNAPSHOT_TIME DATE

4 THREAD_ID NUMBER

5 JOB_TYPE VARCHAR2(32)

6 WAIT_STATE VARCHAR2(32)

7 WAIT_OBJECT_NAME VARCHAR2(32)

8 SQL_ID VARCHAR2(13)

9 DATABASE_ID NUMBER

10 INSTANCE_ID NUMBER

11 SESSION_ID NUMBER

12 SESSION_SERIAL_NUM NUMBER

It keeps about 10 minutes worth of samples of Exadata Storage Cell thread activity:

SQL> @minmax snapshot_time v$cell_thread_history Show min/max (low/high) values in column "snapshot_time" of table v$cell_thread_history... MIN(SNAPSHOT_TIME MAX(SNAPSHOT_TIME ----------------- ----------------- 20130419 14:42:15 20130419 14:52:54

Note that it’s not the V$ view or the database instance which stores this array – it’s the storage cells themselves. If you query the v$cell_thread_history view, your Oracle database session is going to “gather” this instrumentation data from all the required cells and present it to you, that’s why the “cell statistics gather” wait event shows up:

PARSING IN CURSOR #140596385017248 len=42 dep=0 uid=0 oct=3 lid=0 tim=1366404896817011 hv=4063158547 ad='19e452578' sqlid='63awy1gg t2xs8m' select count(*) from v$cell_thread_history END OF STMT PARSE #140596385017248:c=0,e=115,p=0,cr=0,cu=0,mis=0,r=0,dep=0,og=1,plh=4272803188,tim=1366404896817010 EXEC #140596385017248:c=0,e=27,p=0,cr=0,cu=0,mis=0,r=0,dep=0,og=1,plh=4272803188,tim=1366404896817127 WAIT #140596385017248: nam='SQL*Net message to client' ela= 1 driver id=1413697536 #bytes=1 p3=0 obj#=40 tim=1366404896817152 WAIT #140596385017248: nam='cell statistics gather' ela= 283 cellhash#=0 p2=0 p3=0 obj#=40 tim=1366404896818846 WAIT #140596385017248: nam='cell statistics gather' ela= 352 cellhash#=0 p2=0 p3=0 obj#=40 tim=1366404896819317 WAIT #140596385017248: nam='cell statistics gather' ela= 376 cellhash#=0 p2=0 p3=0 obj#=40 tim=1366404896820929 WAIT #140596385017248: nam='cell statistics gather' ela= 326 cellhash#=0 p2=0 p3=0 obj#=40 tim=1366404896822198 WAIT #140596385017248: nam='cell statistics gather' ela= 580 cellhash#=0 p2=0 p3=0 obj#=40 tim=1366404896823620 ...

Now, how frequently do the cells sample their “ASH” data:

SQL> SELECT DISTINCT (snapshot_time - LAG(snapshot_time)

OVER (ORDER BY snapshot_time)) * 86400 lag_seconds

FROM (SELECT distinct snapshot_time

FROM v$cell_thread_history WHERE cell_name = '192.168.12.3');

LAG_SECONDS

-----------

1

Looks like the sampling is done exactly once per second!

So, great, what can we do with this data?

Here’s an Exadata performance script (cth.sql) which uses V$CELL_THREAD_HISTORY to dig into cell activity from the database layer.

First, I must warn you, the CTH (Cell Thread History) is not as powerful as the Database-level ASH (ASH has almost 100 columns in it already, but the v$cell_thread_history has only 12) and there seem to be some shortcomings in the instrumentation data, as you will see.

But it still allows us to drill down a bit deeper into Exadata storage cell activity straight from your favorite database access tool. And it also has database session-level details in it – which means this information can be used for systematic Exadata performance troubleshooting too!

As the database sessions do communicate the SQL_ID of the currently active SQL statement to the storage cells when sending them requests, we can list the TOP activity by SQL_ID inside the storage cells!

The cth.sql script takes 4 arguments:

- The columns to group the CTH activity data by

- The filter settings (which sessions, instances, sql_ids to report?)

- The begin time range to report

- The end time range to report

Note that the CTH view will only have about 10 minutes of history in it (unless the cells are almost idle). For longer-term analysis you might want to gather the history to some table every couple of minutes.

Also, the snapshot_time column shows the CELL time, so if there’s significant clock drift between the storage cells and DB nodes, you might measure the wrong range of data.

Anyway, let’s see the “TOP SQL” in all storage cells:

SQL> @exadata/cth sql_id 1=1 sysdate-1 sysdate

Querying V$CELL_THREAD_HISTORY ("ASH" for Storage Cells) ...

SECONDS SQL_ID

---------- -------------

1755

977 akbpwk3j38vw7

146 25y04n1twszyu

135 98pza6kpfwczj

14 5n83a4q202674

12 0w2qpuc6u2zsp

12 147a57cxq3w5y

7 axdkf5tjztmy7

6 apgb2g9q2zjh1

2 dcq9a12vtcnuw

2 a9gvfh5hx9u98

1 f318xdxdn0pdc

1 0ws7ahf1d78qa

13 rows selected.

Immediately we see that majority of the time consumed does not have any SQL ID associated with it, but we will get back to this later. For now, let’s pick the TOP SQL_ID and drill down into it further, by grouping the data by some more columns:

SQL> @exadata/cth sql_id,job_type,wait_state,wait_object_name sql_id='akbpwk3j38vw7' sysdate-1 sysdate

Querying V$CELL_THREAD_HISTORY ("ASH" for Storage Cells) ...

SECONDS SQL_ID JOB_TYPE WAIT_STATE WAIT_OBJECT_NAME

---------- ------------- -------------------------------- -------------------------------- --------------------------------

868 akbpwk3j38vw7 PredicateFilter working

52 akbpwk3j38vw7 PredicateDiskRead working

45 akbpwk3j38vw7 PredicateCacheGet waiting_for_SKGXP_send

7 akbpwk3j38vw7 CacheGet working

6 akbpwk3j38vw7 PredicateCacheGet working

3 akbpwk3j38vw7 CacheGet waiting_for_SKGXP_send

2 akbpwk3j38vw7 PredicateDiskRead waiting_for_mutex gdisk ext

2 akbpwk3j38vw7 CacheGet waiting_for_mutex RemoteSendPort

1 akbpwk3j38vw7 PredicateFilter waiting_for_mutex PredicateDisk-1

1 akbpwk3j38vw7 PredicateCachePut working

Now we have a breakdown of the storage cell thread activity, when (knowingly) doing work for the SQL_ID akbpwk3j38vw7. The job type shows the “phase” of execution in the data flow inside a storage cell. I think I need to write a separate post about the cell execution phases, but for now, the PredicateDiskRead, as the name says, deals with setting up the IOs for disk reads, the PredicateCacheGet is what deals with the (smart) IO requests handed to storage cells by DB sessions (and sending the results back too) and the PredicateFilter is the phase which does the actual processing of just read datablocks in storage cells (logically “opening” the block and passing it through cache, transactional layers to the data layer functions, which extract the required columns of the matching rows of any predicates offloaded to the storage cells). If you wonder where are the I/O wait states – it looks like for (at least) smart scans, thanks to asynchronous I/O the actual I/O waiting/reaping is done by separate threads, which do not “belong” to a single SQL_ID, but track the completion of all I/Os submitted by all threads independently. Cellsrv is a single (multithreaded) process, so it’s easy to separate tasks within the process. This is also how you avoid keeping all 100+ cellsrv threads “occupied” in case of slow IO.

The wait state column is more complicated than the database layer’s session wait state “waiting or not waiting” logic. There are no separate wait events listed in the CTH, but the wait state itself contains the reasons for some waits. Working means ON CPU (or trying to be on CPU if the OS scheduler hasn’t put the thread there yet) and all the waiting_ states, like the name says, mean waiting for something, like a next request over the network (waiting_for_SKGXP_receive) or asynchronous IO completion (waiting_for_IO_reap). Note that Exadata cellsrv architecture is radically different from what you’re used to from the Database world, it’s a multithreaded job queuing and scheduling engine where one thread may submit IOs and some completely different thread will be the one who checks for the asynchronous IO completion (IO reaping), while a yet another thread does the actual filter processing (offloaded execution) tasks. So don’t expect the cellsrv threads serving your smart scans to actually wait for any I/O, because there are independent threads doing I/O reaping for all incoming requests. If you want more deep details – I do talk about the cellsrv internals and data flow in my Advanced Exadata Performance seminar and also Roger MacNicol from Exadata Development will deliver an Exadata SmartScan Deep Dive presentation at Enkitec E4 in August this year (2013) ;-)

Here’s the (probably) full list of wait states from Oracle 11.2.0.3 BP11 running on Cellsrv 11.2.3.2.1)

initial working waiting_in_scheduler waiting_for_mutex waiting_for_condition_variable waiting_for_IO_reap waiting_for_SKGXP_receive waiting_for_SKGXP_send looking_for_job waiting_for_msg_hdr waiting_for_system_work waiting_for_offline_done waiting_for_storidx_lock waiting_for_issued_ios waiting_for_buffer_allocation waiting_for_connect waiting_for_flashcache_init waiting_for_io_quiescence waiting_for_io_draining

Here’s another example, where I show all activity in all storage cells, by SQL_ID (if known), the database_id, instance_id and the session_id of the database session:

SQL> @exadata/cth sql_id,database_id,session_id,job_type,wait_state 1=1 sysdate-1/24/60 sysdate

Querying V$CELL_THREAD_HISTORY ("ASH" for Storage Cells) ...

SECONDS SQL_ID DATABASE_ID SESSION_ID JOB_TYPE WAIT_STATE MIN(SNAPSHOT_TIME MAX(SNAPSHOT_TIME

---------- ------------- ----------- ---------- -------------------------------- -------------------------------- ----------------- -----------------

1301 0qmhpg330zqfu 1538629110 2916 PredicateFilter working 20130514 09:20:11 20130514 09:21:10

313 0qmhpg330zqfu 1538629110 5 PredicateFilter working 20130514 09:20:11 20130514 09:21:10

242 0qmhpg330zqfu 1538629110 203 PredicateFilter working 20130514 09:20:11 20130514 09:21:10

73 0 0 UnidentifiedJob waiting_for_IO_reap 20130514 09:20:11 20130514 09:21:10

9 0 0 UnidentifiedJob working 20130514 09:20:13 20130514 09:20:58

4 1538629110 972 CachePut working 20130514 09:20:17 20130514 09:21:04

3 0 0 NetworkRead working 20130514 09:20:17 20130514 09:20:34

2 1538629110 584 CachePut working 20130514 09:20:13 20130514 09:20:59

2 1538629110 492 CachePut working 20130514 09:20:26 20130514 09:20:52

2 0qmhpg330zqfu 1538629110 5 PredicateDiskRead working 20130514 09:20:58 20130514 09:20:58

1 0 0 IO poor performance detection th 20130514 09:20:23 20130514 09:20:23

1 0 0 ProcessIoctl working 20130514 09:20:26 20130514 09:20:26

1 147a57cxq3w5y 1538629110 2140 CacheGet working 20130514 09:20:12 20130514 09:20:12

1 0w2qpuc6u2zsp 1538629110 1479 CacheGet working 20130514 09:21:04 20130514 09:21:04

1 0w2qpuc6u2zsp 1538629110 986 CacheGet working 20130514 09:20:44 20130514 09:20:44

1 0 0 System stats collection thread working 20130514 09:20:41 20130514 09:20:41

1 147a57cxq3w5y 1538629110 790 CacheGet waiting_for_SKGXP_send 20130514 09:20:29 20130514 09:20:29

1 apgb2g9q2zjh1 1538629110 1280 CacheGet working 20130514 09:21:01 20130514 09:21:01

1 apgb2g9q2zjh1 1538629110 1085 CacheGet working 20130514 09:21:02 20130514 09:21:02

1 4075070345 1177 CacheGet working 20130514 09:20:23 20130514 09:20:23

Notes:

- Exadata storage cell software (cellsrv) samples active thread states every second (like ASH), so every record in V$CELL_THREAD_HISTORY stands for 1 second of “cell activity time” if counted. You can COUNT the records in V$CELL_THREAD_HISTORY just like you can count them in ASH to get approximate (cell) activity time.

- You do not have to query the GV$ view as the V$ view itself returns all data from all storage cells (unless restricted by a where clause)

- There is no 1-1 correspondence of a cell thread and your database session, so don’t try to simply add these numbers up. A smart scan can asynchronously and independently work for your database session, working in advance, doing prefetching and preprocessing regardless of what your database session happens to be doing. One smart scan “session” can end up spawning multiple threads in each storage cell if the cell can not produce data fast enough than the database session can consume.

- The database_id column corresponds to the “global database ID” (

SELECT ksqdngunid FROM X$KSQDN), not the usual DBID in v$database.

A warning: There have been a few cases where my session crashes (well, actually my process, but not the database) when querying this V$ view:

SQL> @exadata/cth wait_state,sql_id 1=1 sysdate-1/24 sysdate

Querying V$CELL_THREAD_HISTORY ("ASH" for Storage Cells) ...

, MIN(snapshot_time), MAX(snapshot_time)

*

ERROR at line 5:

ORA-03113: end-of-file on communication channel

But it only has affected my own session so far, so when I log in and rerun the script, it works ok again. I yet have to file a bug for this – this is a documented V$ view and it shouldn’t crash when queried.

This post ended up quite long, so I will continue a Part 2 some day in the future :)

You can download the latest version of cth.sql from here.

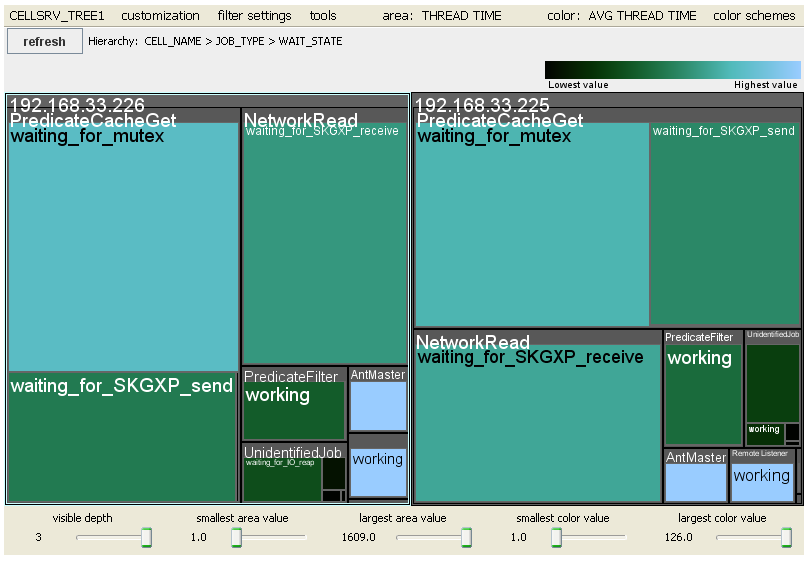

Update: John Beresniewicz from Oracle sent me a treemap visualization of the CTH data he experimented with some years ago, here’s an example: